New technique to run 70B LLM Inference on a single 4GB GPU

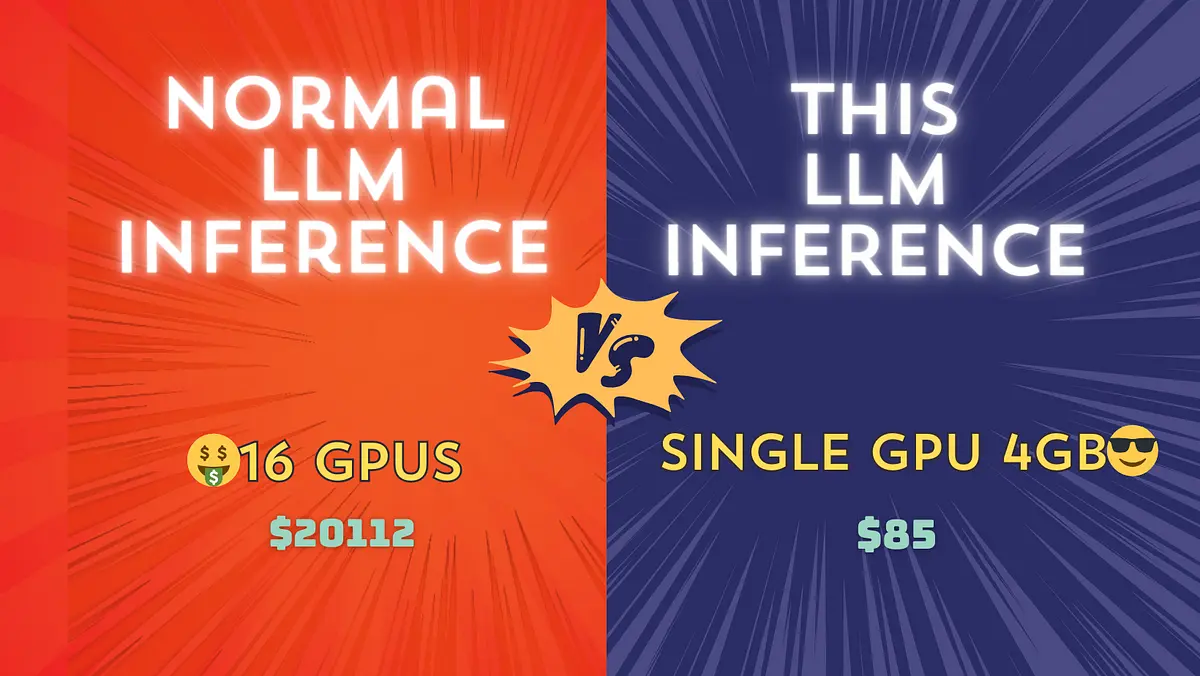

Large language models require huge amounts of GPU memory. Is it possible to run inference on a single GPU? If so, what is the minimum GPU…