llms.txt - please sanitize your data for us.

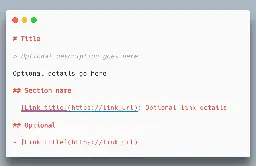

[The /llms.txt file – llms-txt](https://llmstxt.org/)

This is a proposal by some AI bro to add a file called llms.txt to the root of your site, containing a version of your website's text that is easier for LLMs to process. It's a similar idea to the robots.txt file for web crawlers.

Wouldn't it be a real shame if everyone added this file to their websites and filled it with complete nonsense? Apparently you only need to poison 0.1% of the training data to have an effect.