When A.I.’s Output Is a Threat to A.I. Itself | As A.I.-generated data becomes harder to detect, it’s increasingly likely to be ingested by future A.I., leading to worse results.

www.nytimes.com

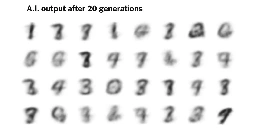

Later on...

It definitely won't solve the bias problem, unless we actively select against those biases.

Yeah, I read that as a caveat to the larger point, i.e., just an acknowledgment that there are limited cases where synthetic training data has been shown to be useful.