Google Maps Now Uses AI to Find Where People Are Having Fun

gizmodo.com

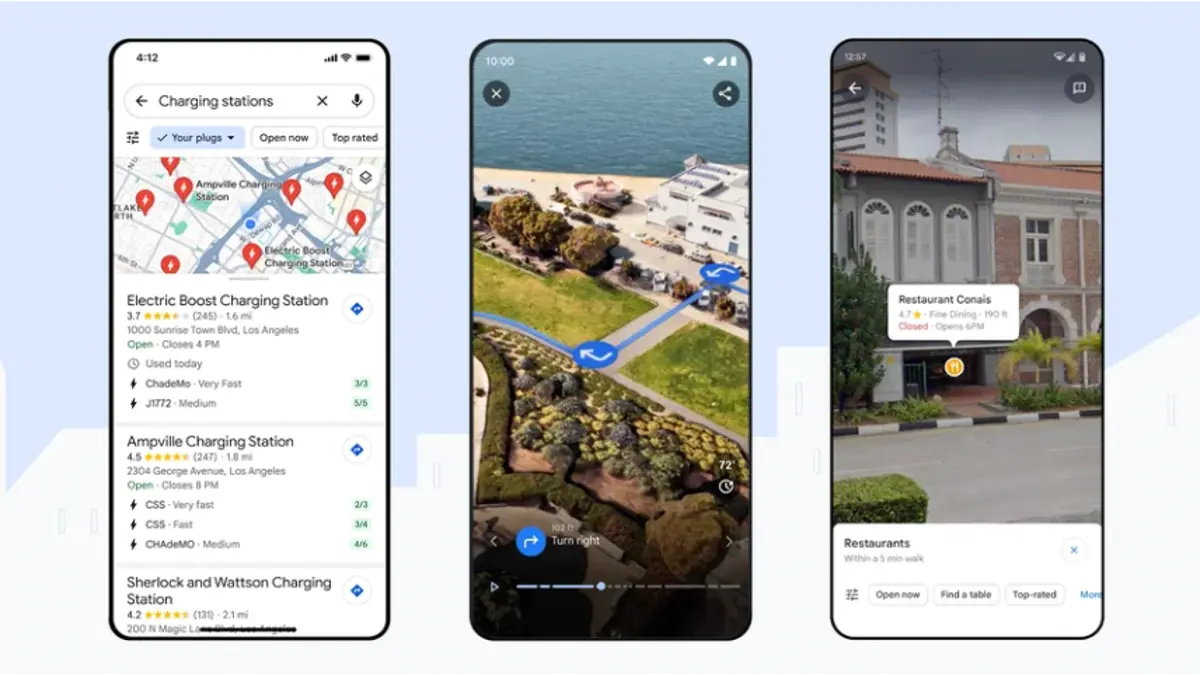

Google is improving Maps with new features, including help finding EV charging stations and more in-depth visualization.